Registering Explicit to Implicit:

Towards High-Fidelity Garment Mesh Reconstruction from Single Images

Heming Zhu1,2, Lingteng Qiu1,2, Yuda Qiu1, Xiaoguang Han1,3,*

*Corresponding email: hanxiaoguang@cuhk.edu.cn

1 SSE, The Chinese University of Hong Kong, Shenzhen

2 Shenzhen Research Institute of Big Data

3 FNII, The Chinese University of Hong Kong, Shenzhen

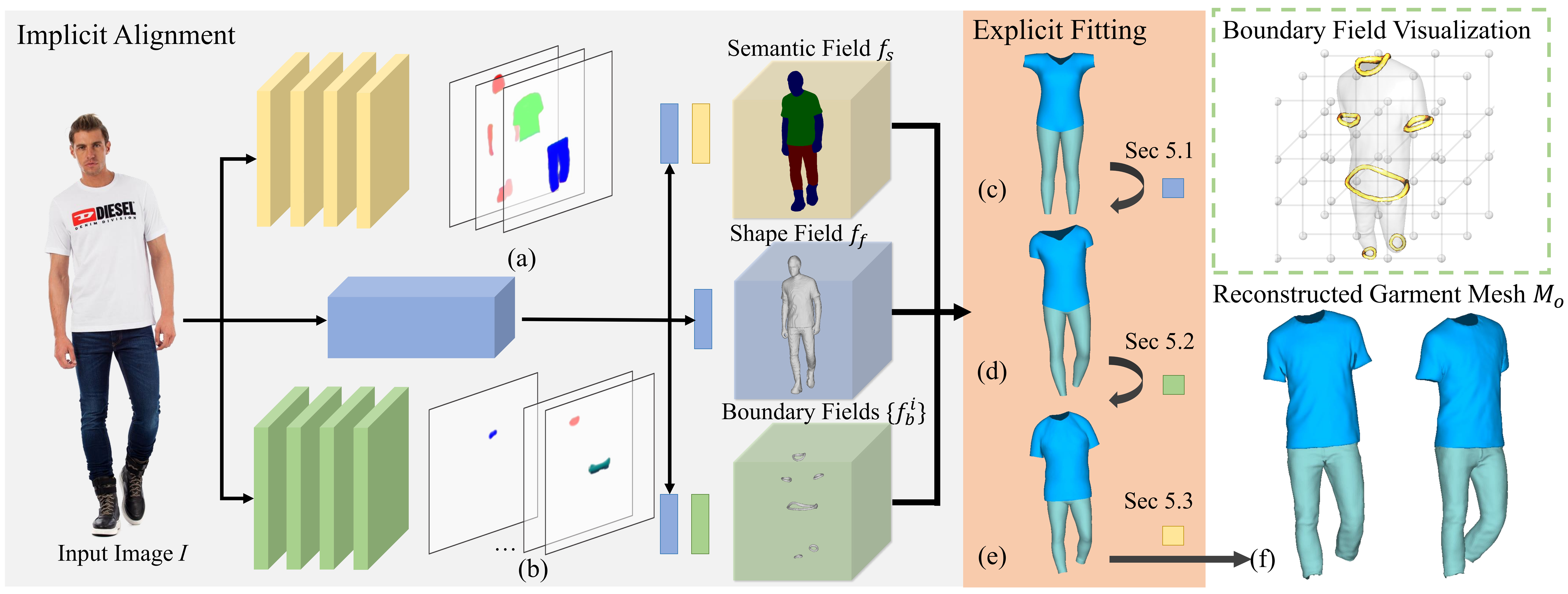

Figure 1: Given a single in-the-wild image of a clothed human, our model, ReEF, can generate high-fidelity, topology-consistent layered garment meshes. The appearance of the reconstructed garments is well aligned with the input image. Moreover, the produced garment meshes can be used for further content creation, e.g., placed on other virtual characters.

Overview

Fueled by the power of deep learning techniques and implicit shape learning, recent advances in single-image human digitization have reached unprecedented accuracy and can recover fine-grained surface details such as garment wrinkles. However, a common problem with implicit-based methods is that they cannot produce a separate, topology-consistent mesh for each garment piece, which is crucial for current 3D content creation pipelines. To address this issue, we propose a novel geometry inference framework, ReEF, that reconstructs topology-consistent layered garment meshes by {Re}gistering the {E}xplicit garment template to the whole-body implicit {F}ields predicted from single images. Experiments demonstrate that our method notably outperforms its counterparts on single-image layered garment reconstruction and can provide high-quality digital assets for further content creation.
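The general idea of registering an explicit template to an implicit field can be illustrated with a minimal sketch. It assumes the implicit field is a signed distance function (SDF); ReEF's predicted fields and its actual registration procedure may differ. An analytic sphere stands in for a network prediction, and the function names (`sphere_sdf`, `sdf_gradient`, `register_to_level_set`) are hypothetical. Each vertex is moved along the field's gradient with a Newton-style projection toward the zero level set, while the template's face connectivity is never modified, which is why the output keeps the template's topology.

```python
import numpy as np


def sphere_sdf(points, center, radius):
    """Signed distance to a sphere (stand-in for a predicted implicit field)."""
    return np.linalg.norm(points - center, axis=1) - radius


def sdf_gradient(sdf, points, eps=1e-4):
    """Central finite-difference gradient of `sdf` evaluated at each point."""
    grad = np.zeros_like(points)
    for k in range(points.shape[1]):
        offset = np.zeros(points.shape[1])
        offset[k] = eps
        grad[:, k] = (sdf(points + offset) - sdf(points - offset)) / (2 * eps)
    return grad


def register_to_level_set(vertices, sdf, steps=10, step_size=1.0):
    """Move template vertices onto the zero level set of `sdf`.

    Each step applies v <- v - step_size * f(v) * grad f(v) / |grad f(v)|^2.
    Only vertex positions change; faces (and hence topology) are untouched.
    """
    v = vertices.astype(float).copy()
    for _ in range(steps):
        f = sdf(v)
        g = sdf_gradient(sdf, v)
        denom = np.maximum(np.sum(g * g, axis=1), 1e-12)  # avoid divide-by-zero
        v -= step_size * (f / denom)[:, None] * g
    return v


# Usage: pull a few hypothetical template vertices onto a unit sphere.
template = np.array([[1.5, 0.0, 0.0], [0.0, 2.0, 0.0],
                     [0.0, 0.0, 0.5], [1.0, 1.0, 1.0]])
center = np.zeros(3)
field = lambda p: sphere_sdf(p, center, 1.0)
registered = register_to_level_set(template, field)
print(np.abs(field(registered)).max())  # residual distance, close to 0
```

A real garment template would also need regularization (e.g., smoothness or edge-length terms) so that vertices do not collapse or fold when the field is noisy; the sketch omits this for brevity.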

If you are interested in our work, please consider citing our paper!

@InProceedings{Zhu_2022_CVPR,

Results (From Internet Images)

Pipeline

Figure 2: The pipeline of our proposed approach. (a) The semantic attention maps. (b) The boundary attention maps. (c) The explicit template mesh. (d) The pose-deformed template mesh. (e) The boundary-deformed template mesh. (f) The output layered garments.